
Phillip E. Pope, PhD Student, Department of Computer Science, University of Maryland, College Park. Email: pepope (at) cs (dot) umd (dot) edu. Google Scholar | GitHub | CV


In September 2025, I am joining the Initiative for Computational Catalysis (ICC) at the Flatiron Institute as a Research Fellow. My research is on machine learning (ML) for electronic structure. Specifically, I work on speeding up electronic structure calculations by learning initialization data. The long-term vision of my work is to accelerate the search for new molecules and materials, such as catalysts. During my PhD, I was advised by David Jacobs and Hong-Zhou Ye and was part of the Center for Machine Learning and the Institute for Advanced Computer Studies at the University of Maryland, College Park. My thesis was "Speeding up Density Functional Theory Calculations with Machine Learning: A Density Learning Approach". My previous work has spanned a number of other ML topics, including robustness, data manifolds, explainability, and generative models.


Bridging Quantum Chemistry and Machine Learning for a Greener Future. UMIACS "What's New", Nov. 25, 2024. X/Twitter


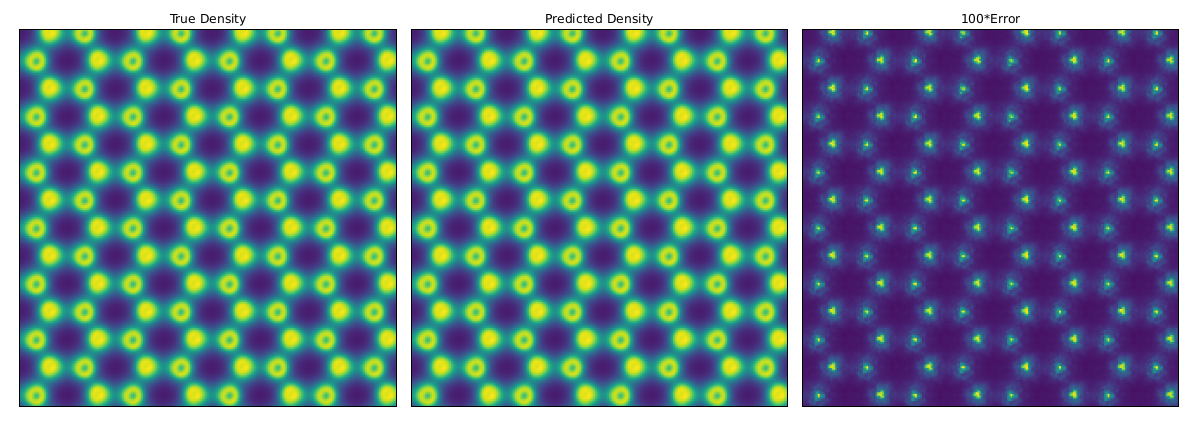

Towards Combinatorial Generalization for Catalysts: A Kohn-Sham Charge-Density Approach. Pope, P., Jacobs, D. Published at the Thirty-seventh Conference on Neural Information Processing Systems (NeurIPS 2023). VIDEO


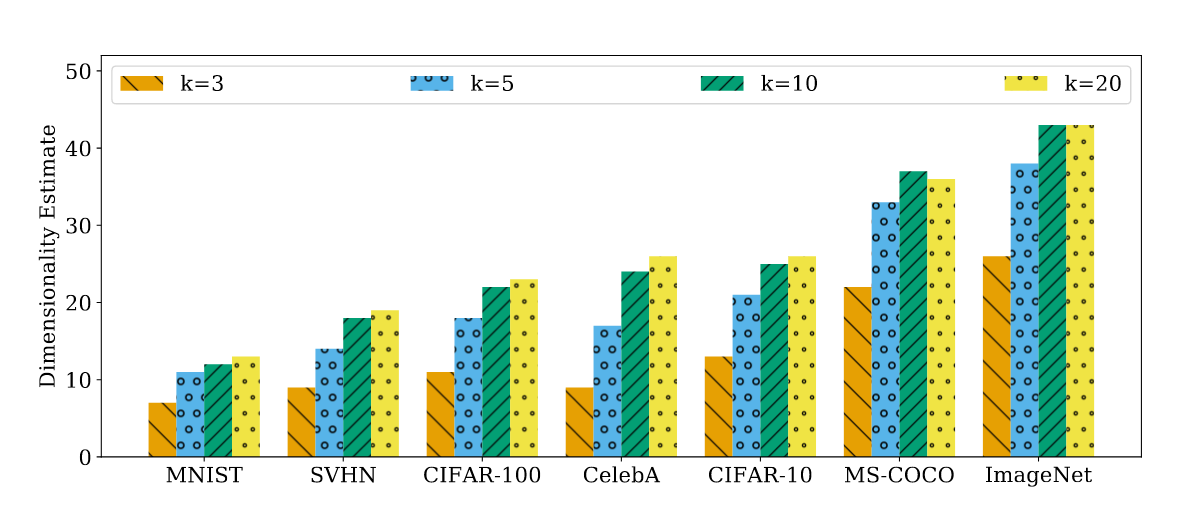

The Intrinsic Dimension of Images and Its Impact on Learning. Pope, P., Zhu, C., Abdelkader, A., Goldblum, M., Goldstein, T. Published at the Ninth International Conference on Learning Representations (ICLR 2021). Awarded Spotlight Presentation (3.8% overall acceptance rate). VIDEO


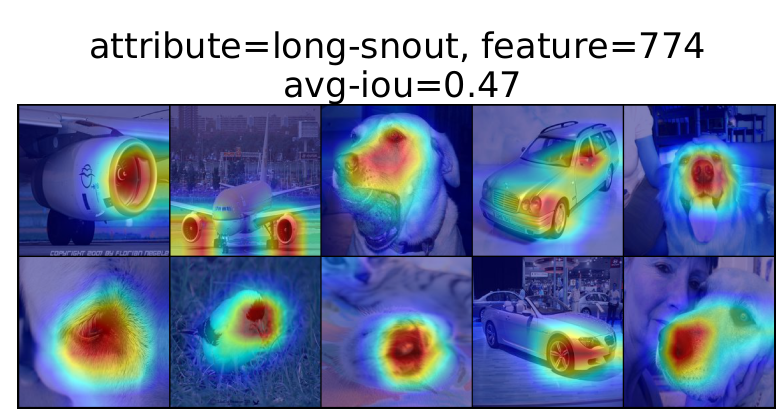

A Comprehensive Study of Image Classification Model Sensitivity to Foregrounds, Backgrounds, and Visual Attributes. Moayeri, M., Pope, P., Balaji, Y., and Feizi, S. Published at the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2022). Awarded Oral Presentation (4.2% overall acceptance rate).


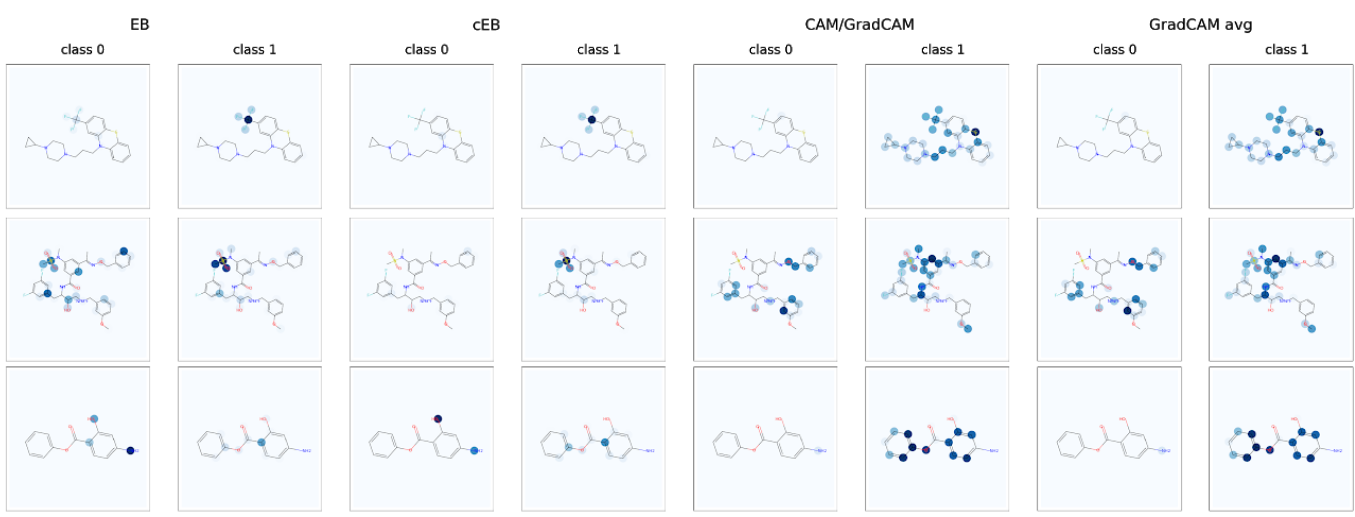

Explainability Methods for Graph Convolutional Neural Networks. Pope, P.*, Kolouri, S.*, Rostami, M., Martin, C., Hoffmann, H. Published at the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2019). Awarded Oral Presentation (5.5% overall acceptance rate). VIDEO


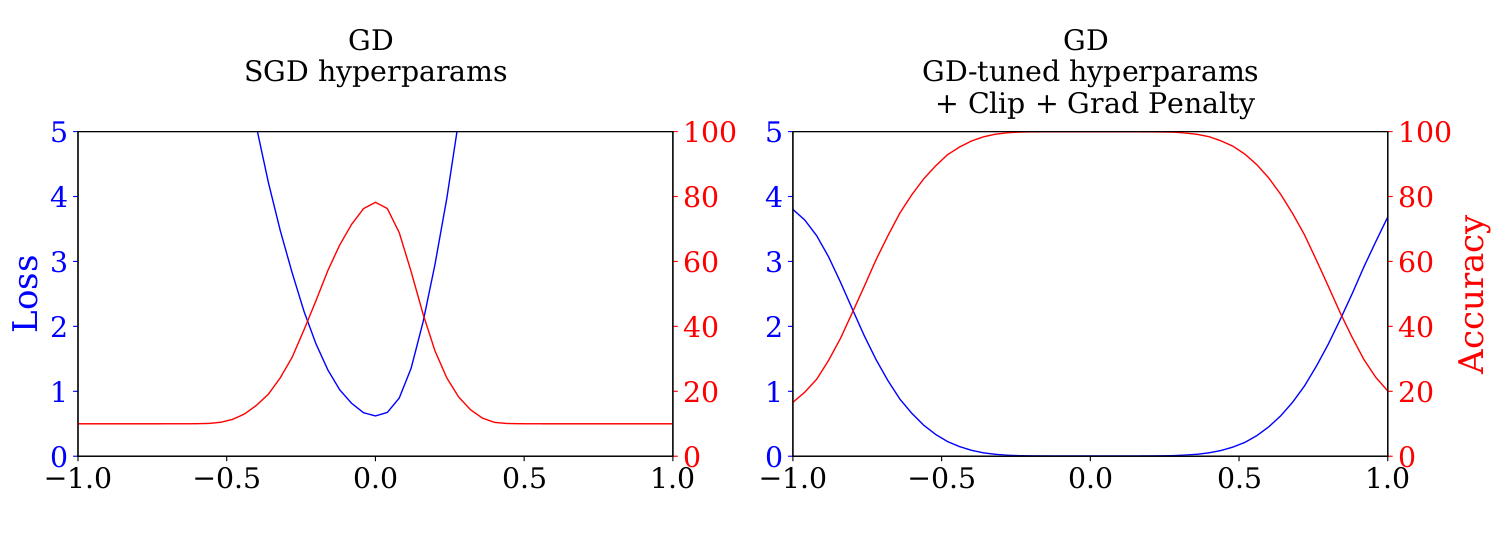

Stochastic Training is Not Necessary for Generalization. Geiping, J., Goldblum, M., Pope, P., Moeller, M., and Goldstein, T. Published at the Tenth International Conference on Learning Representations (ICLR 2022).


Influence Functions in Deep Learning Are Fragile. Basu, S.*, Pope, P.*, Feizi, S. Published at the Ninth International Conference on Learning Representations (ICLR 2021).


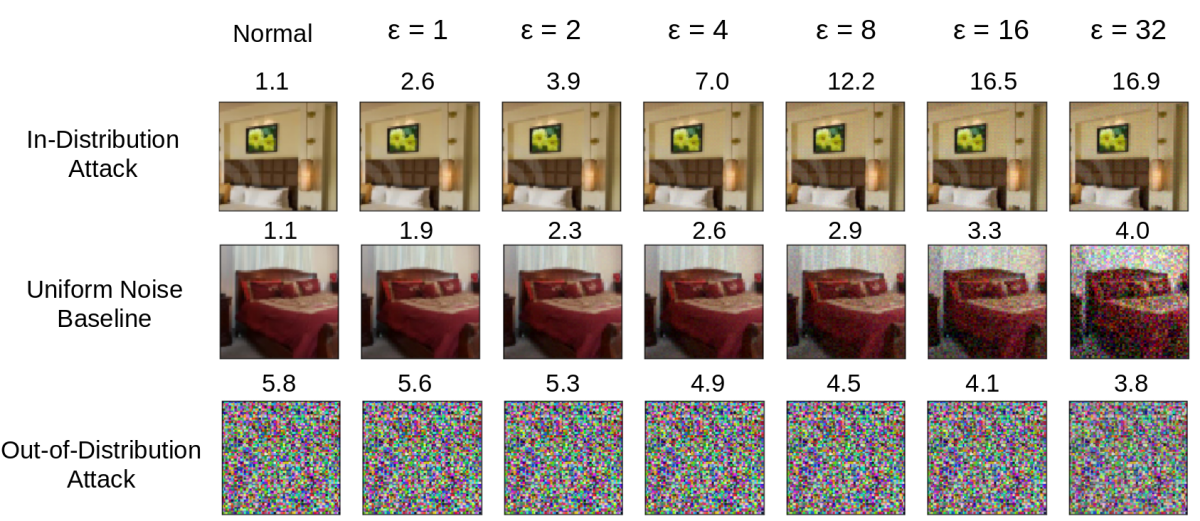

Adversarial Robustness of Flow-Based Generative Models. Pope, P.*, Balaji, Y.*, Feizi, S. Published at the 23rd International Conference on Artificial Intelligence and Statistics (AISTATS 2020).


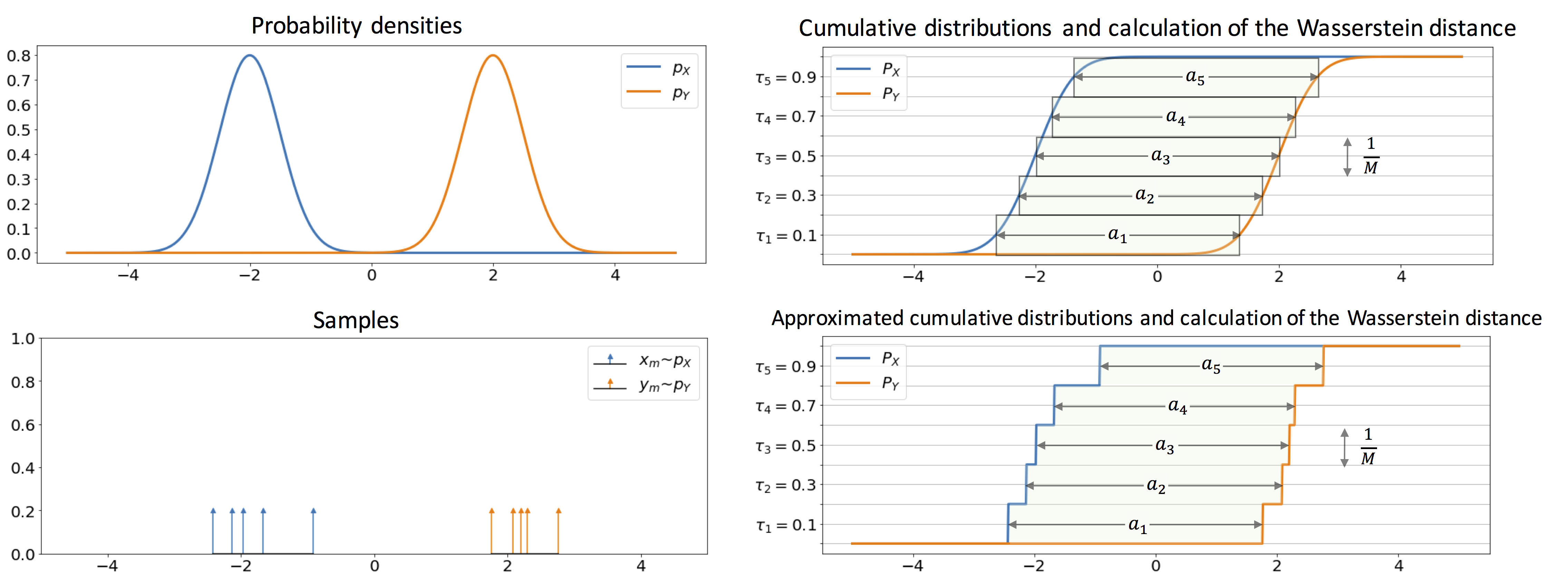

Sliced-Wasserstein Autoencoders. Kolouri, S., Pope, P., Martin, C., Rohde, G. Published at the Seventh International Conference on Learning Representations (ICLR 2019).